Benefits of Edge AI in CamThink NeoEyes NE301 Series

As the AI era accelerates, more and more people are joining the wave. How to use AI more effectively, safely, and responsibly has become an important question. At CamThink, we have always upheld an open-source philosophy, and we are committed to deploying AI locally, on-device, to better protect user privacy and promote the democratization of AI.

Below, we will share in detail the origins of this commitment and why it matters.

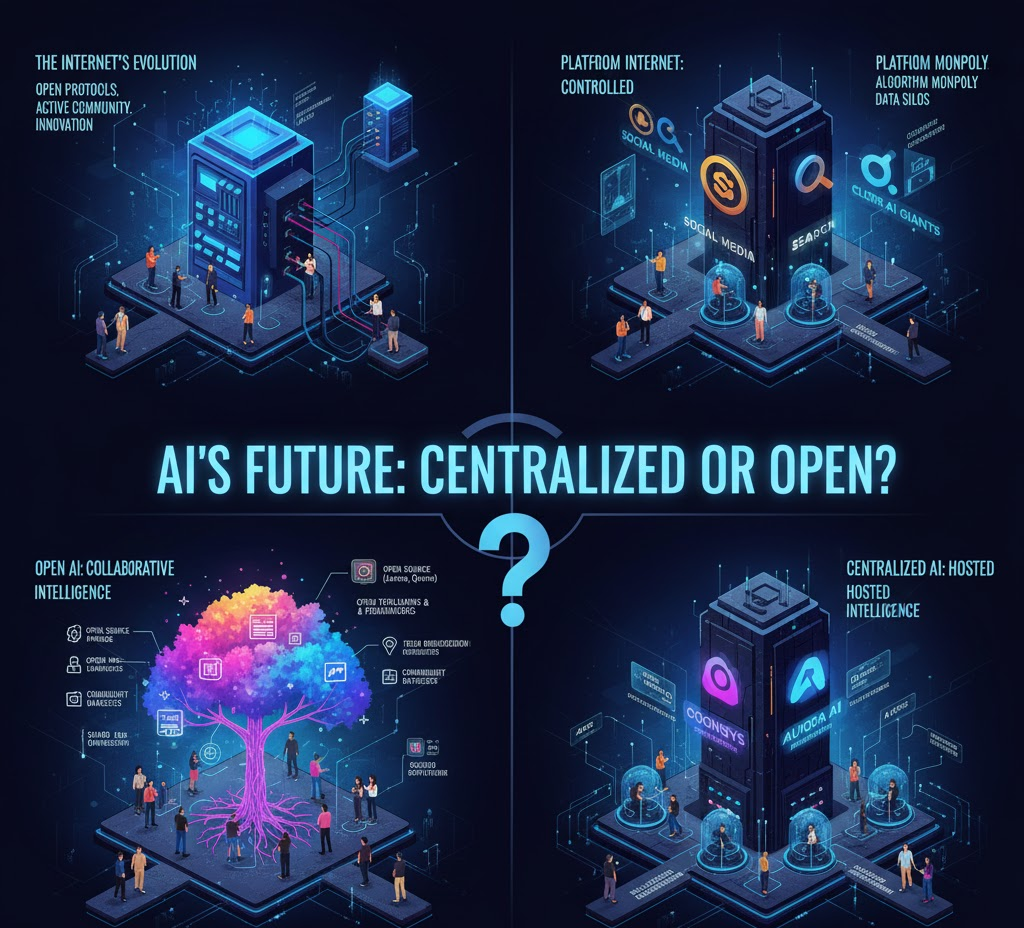

I. Will the Development of AI Trend Towards Centralization?

The Internet was born with the ideal of “open protocols and free information,” but it was ultimately centralized by a few platforms through algorithmic and traffic monopolies, which replaced users’ digital sovereignty with the “convenience of centralization.”

Today, AI technology, centered around large models, is driving innovation at an unprecedented speed. However, this “surface prosperity” conceals a concentration of power in the underlying infrastructure: most innovation relies on the models, computing power, and cloud services of a few “Super AIs.”

AI is now repeating the Internet’s history of moving from “decentralization” to “re-centralization,” with global innovation built atop the infrastructure of a few giants. At the same time, an AI development path centered on open source and openness is growing within the community. It advocates “collective intelligence building” to return innovation to its collaborative roots and to limit the unchecked power of closed-source ecosystems.

We believe the future of AI will be a tension between two forms: the “Hosted Intelligence” dominated by giants and the “Autonomous Intelligence” of open-source collaboration.

But we must ask ourselves: Do we want AI to be a super-system managed by a few, or an open ecosystem built by all humanity?

II. The Fundamental Paradox: The More AI Understands Me, The More My Privacy is at Risk?

The CamThink team’s view is that if AI is truly to deeply integrate into the lives of every individual, it should not be uniformly decided and managed by a handful of platforms under the name of Super AI. We believe that open source is the core driving force. Only by truly localizing AI capabilities to every specific scenario—through models, tools, governance, and the open hardware we are developing—can we follow this path of technological openness. This will allow more people and enterprises to participate in jointly defining, scrutinizing, and using AI. Therefore, we firmly believe: “Openness is the AI future we should actively embrace.”

To understand this judgment, we need to look past the surface and confront three core arguments:

There is a Fundamental Difference Between "Usability" and "Understanding Me"

We must distinguish between two use cases for AI.

The first is tool-based AI, such as translation, code generation, or general text generation. For these needs, users often focus on “is the result accurate?” more than “is the process private?” Using cloud-based supermodels is indeed efficient here.

But the logic fundamentally changes in the second scenario: deep-service “Exclusive AI.” When you need an assistant to organize family schedules, analyze health reports, or serve as an emotional companion, the AI must deeply “understand you.”

The cost of this “understanding” means it must process your diaries, private chat records, financial status, and even biometric features. In this domain, security and privacy are not optional; they are the baseline—the non-negotiable bottom line of digital sovereignty.

If you cannot trust its security, the convenience provided by exclusive service is worthless.

The "Digital Persona Paradox" and Its Cost

We must squarely face this paradox, which concerns everyone’s “Digital Persona”—a unique digital mirror constructed from all your private data.

The more deeply AI understands you (in order to provide exclusive service), the more of your private data it needs; and the more this data is concentrated in a centralized cloud, the more your “Digital Persona” is exposed to outside parties, and the more fragile your control over it becomes.

In this unequal transaction, closed, centralized AI systems demand that we trade our digital sovereignty for momentary convenience. We might entrust all our life data, from health reports to private communications, to a few giants, essentially staking our security on their promise to “never do evil.” But the lessons of history are harsh: any centralized data vault is not only a massive target for hackers but also, sooner or later, a bargaining chip to be exploited when commercial interests are being maximized.

If the issue of data ownership is not resolved, then the smarter and more powerful AI becomes, the more transparent and controlled we, as individuals, will be. Our concern is not AI technology itself but the high concentration of its power, because whoever holds that power gains the ability to define and predict our “Digital Persona.” Ultimately, we may find ourselves living in a highly efficient “digital prison” that no one is able to challenge.

The Correct Path: Controllable AI and Edge Computing

To break the paradox of “understanding me” versus “privacy” being mutually exclusive, we must choose the correct path: data that is entirely localized, with intelligence residing at the device’s edge.

We believe that truly Controllable AI should not be a black box program but an “assistant” running on your local device, bounded by digital ethics.

The value of edge computing is that its technical architecture removes the opportunity for centralized exploitation:

Data Localization Eliminates Risk: When your personal data (such as voice, health, and identification data) is processed on the local device, high-value private data is never uploaded to the cloud. The data stays “dark” to external systems, so there is no central data store to act as a single point of failure or to be commercialized by the giants that build “data centers.” The more your AI assistant “understands you,” the more securely its knowledge is stored on the device you physically control.

Open Source Transparency Enables Control: Running locally is not enough on its own; Edge AI must be combined with open-source models to achieve “Controllable AI.” Open-source models are the cornerstone of trust. They allow the security community and the public to audit the code openly and to expose any privacy vulnerabilities or attempts to upload data, which constrains the abuse of power at the technical level. At the same time, users regain decision-making power and can prune and customize the model.
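As a rough illustration of the data-localization idea above, the sketch below keeps a raw frame inside a single on-device function and exposes only a small derived event to the rest of the system. Everything in it is hypothetical: `LocalEvent`, `detect_stub`, and `process_on_device` are names invented for this example, not CamThink or NE301 APIs, and the stub merely stands in for a real local model.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class LocalEvent:
    """Derived result kept or displayed on the device; holds no raw sensor data."""
    timestamp: float
    labels: tuple
    count: int


def detect_stub(frame: bytes):
    """Hypothetical placeholder for an on-device model."""
    return ("person",) if frame else ()


def process_on_device(frame: bytes, timestamp: float, detect=detect_stub) -> LocalEvent:
    """Run inference locally and return only the derived event.

    The raw frame is used inside this function and is never copied
    into the returned object.
    """
    labels = tuple(detect(frame))
    return LocalEvent(timestamp=timestamp, labels=labels, count=len(labels))


# Illustrative input: the bytes stand in for raw pixels.
event = process_on_device(b"\x00\x01raw-pixels", timestamp=1700000000.0)
payload = json.dumps(asdict(event))  # what the rest of the system would see
print(payload)
```

The design point is the function boundary: anything that consumes `payload` has labels and a count to work with, while the raw bytes are never part of it. Because the code is open, that boundary is also something a reader can check rather than take on trust.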

This is why the development of Controllable AI, Edge AI, and open-source models is so crucial. Only when computing power moves down to the edge and models are in the hands of users can we fundamentally achieve “the democratization of intelligence,” dispersing power to every digital citizen and allowing us to enjoy convenience while keeping sovereignty over our own data.

III. The Importance of Edge AI Development for AI Equity and Controllability

If we agree that “data sovereignty” is the battleground of the AI era, then Edge AI is the key defense protecting this territory. It concerns not only the choice of technical path but also the ownership and controllable boundaries of future AI development.

Physical Isolation: The Highest Defense Line for Controllable AI

In cloud architecture, data protection ultimately relies on the contractual commitments of a “trusted third party.” Edge computing, through physical isolation, hands control back to the user. When the AI model runs on a local terminal or in a private environment, data never leaves the local environment, which removes the risk of leakage from remote transmission and cloud storage at the architectural level. This provides the highest level of security for sensitive scenarios such as healthcare, finance, and personal services.

Efficiency Breakthroughs: Edge AI Becomes Practical

Edge AI is undergoing a critical transition from “proof of concept” to “large-scale application,” signaling that running AI locally no longer requires compromising on performance. This shift is the result of specialized hardware and lightweight, diversified models evolving together:

Hardware: High-performance compute extension cards (such as the Rockchip RK1820 and Hailo-10H) have achieved breakthroughs in energy efficiency with customized architectures that integrate memory and compute, and they can run complex large language models smoothly. At the same time, low-power chips such as MCUs with integrated NPUs (e.g., STM32N6) are driving the popularization of TinyML.

Models: Small-parameter open-source models like Qwen and Gemma 3 are bringing generative AI capabilities to the edge, and techniques like quantization and distillation compress them further. Meanwhile, traditional small models like YOLO continue to deliver efficient results in specific vertical scenarios.
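To make the quantization point concrete, here is a minimal sketch of symmetric per-tensor int8 quantization using only the Python standard library. It is an illustration under simplifying assumptions, not the method used by any particular model or toolchain: the weight values are synthetic, and the helper names `quantize_int8` and `dequantize` are invented for this example.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor quantization: one float scale, int8 values."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]


# Synthetic weights in [-1.0, 1.0]; purely illustrative.
weights = [0.02 * ((i * 37) % 101 - 50) for i in range(1000)]

fp32 = array("f", weights)            # 4 bytes per weight
q, scale = quantize_int8(weights)     # 1 byte per weight plus one scale
restored = dequantize(q, scale)

size_ratio = (len(fp32) * fp32.itemsize) / (len(q) * q.itemsize)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("size reduction factor:", size_ratio)
print("max absolute error:", max_error, "(bounded by about scale/2 =", scale / 2, ")")
```

Storing each weight in one byte instead of four shrinks the tensor roughly fourfold, at the cost of a rounding error of at most about half a quantization step per weight. Real deployments typically use finer-grained scales (per channel or per group) and calibrate on data, which this sketch leaves out.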

This joint progress in hardware and software largely addresses the performance concerns around Edge AI, marking our entry into a phase where “computing power is everywhere, and intelligence is within reach.” Data no longer needs to travel to a distant cloud; the full loop from data to value can close at the edge.

What Is Needed to Build Edge AI?

Despite the accelerating development of Edge AI, barriers still exist. Edge AI today remains a niche pursuit, beset by complex environment configuration and coding errors. To truly bring Edge AI to the masses, we need to bridge three gaps:

Open Hardware Architecture and Flexibility: We need to break down the driver and protocol barriers between chip manufacturers, pushing hardware design towards greater openness and modularity. This openness is the physical foundation for realizing Controllable AI, granting developers the freedom to choose and customize.

Low-Power Perception and Persistence: True edge intelligence must be “ubiquitous,” meaning devices are often deployed in remote areas with no electricity or network access. We need AI devices to achieve “long-endurance perception” like biological organisms, performing wake-up, high-precision inference, and data collection with extremely low power consumption. Solving the “last mile” power issue is key to embedding AI into every corner of human life.

Lowering Development Barriers and Standardization: Edge computing should not be limited to senior engineers. We need to deeply encapsulate complex drivers, operating systems, and model deployment processes, creating standardized, out-of-the-box solutions. This will truly make “Edge AI simpler.”
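The low-power requirement above comes down to simple arithmetic: a device that sleeps most of the time and wakes briefly for inference draws an average current far below its active current. The sketch below works through that duty-cycle calculation. All figures are hypothetical and chosen only for illustration; they are not NE301 specifications, and the function names are invented for this example.

```python
def average_current_ma(active_ma, active_s, sleep_ma, period_s):
    """Time-weighted average current over one wake/sleep cycle."""
    if not 0 <= active_s <= period_s:
        raise ValueError("active_s must be between 0 and period_s")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_days(capacity_mah, avg_ma):
    """Ideal runtime in days, ignoring self-discharge and conversion losses."""
    return capacity_mah / avg_ma / 24.0


# Hypothetical device: wakes for 2 s of capture and inference every 10 minutes.
ACTIVE_MA = 200.0      # assumed current while awake
SLEEP_MA = 0.01        # assumed deep-sleep current
ACTIVE_S = 2.0
PERIOD_S = 600.0
CAPACITY_MAH = 5000.0  # assumed battery capacity

avg = average_current_ma(ACTIVE_MA, ACTIVE_S, SLEEP_MA, PERIOD_S)
always_on = average_current_ma(ACTIVE_MA, PERIOD_S, SLEEP_MA, PERIOD_S)

print(f"average current, duty-cycled: {avg:.3f} mA")
print(f"battery life, duty-cycled:    {battery_life_days(CAPACITY_MAH, avg):.0f} days")
print(f"battery life, always on:      {battery_life_days(CAPACITY_MAH, always_on):.1f} days")
```

Under these assumed numbers the gap between always-on and duty-cycled operation is roughly the ratio of the cycle period to the awake time, which is why fast wake-up and a very low sleep current matter as much as inference speed for battery-powered deployments.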

This is precisely the strategic core of CamThink: We are committed to building the “Perception + Computing Power + Open Source” Edge AI hardware infrastructure. By providing open hardware architecture and underlying source code, we eliminate the technical black box of Edge AI, allowing more developers of varying skill levels to truly take control of their AI.

IV. The Long Journey of the Open Ecosystem

Despite the growing open-source community, true “AI equality” remains distant. The current AI ecosystem still faces fragmentation issues, such as inconsistent model formats, hardware compatibility challenges, and fragmented application scenarios, leaving most people excluded from AI innovation.

CamThink is committed to being a steadfast “participant” in this transformation. By choosing an open-source, edge computing, and privacy-first approach, we forgo short-term profits in favor of a more challenging but socially valuable path. We are dedicated to contributing core code for free, working to reduce the technical barriers for everyday people to access and develop AI.

We do not seek to become the sole “super giant,” but rather the “super partner” to countless developers and individuals. We believe the future of AI belongs to everyone, and the right to build a free, open, and controllable intelligent world is in our hands.